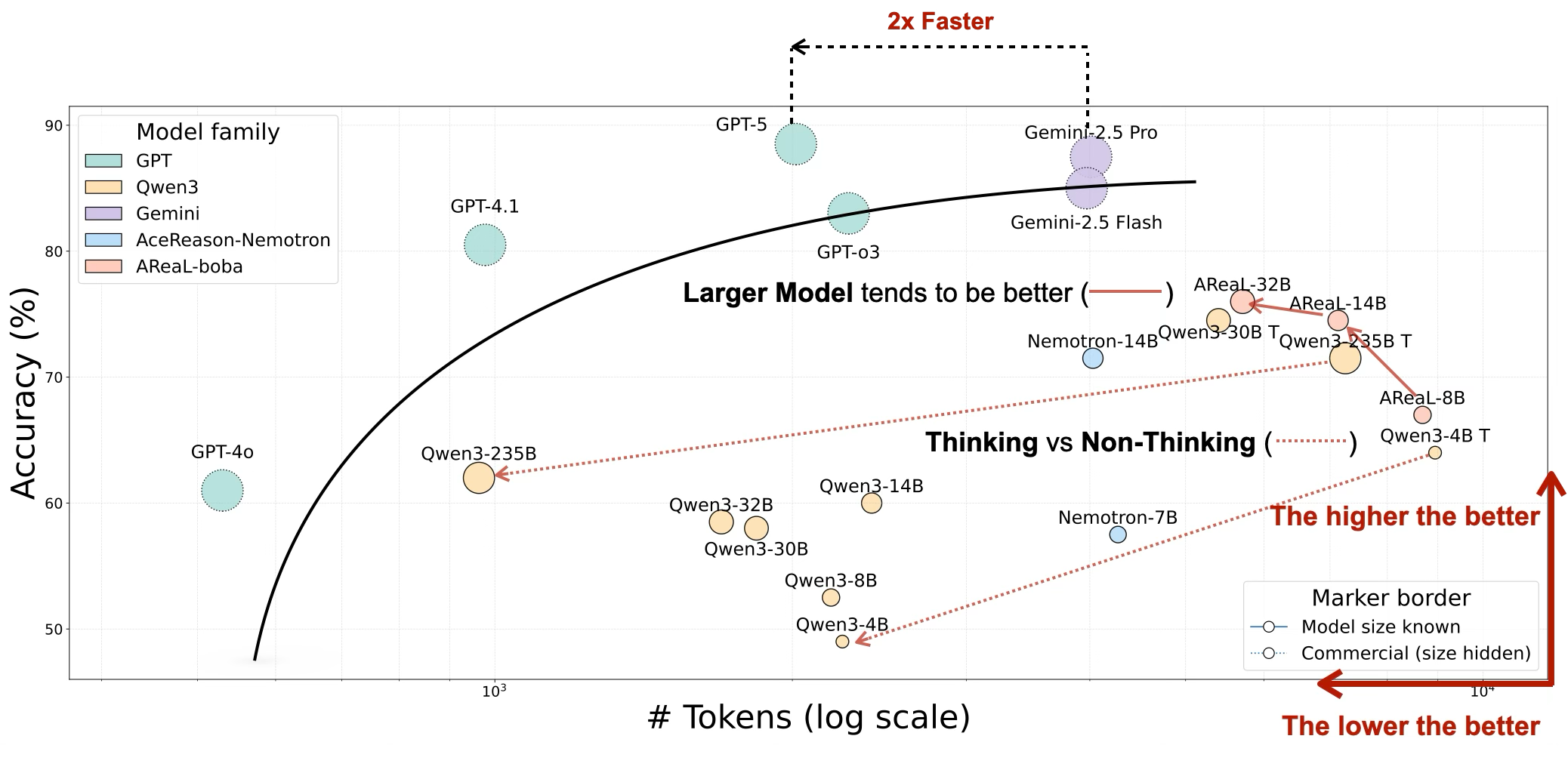

Performance of various LLMs on OckBench, reported separately for the Math and Coding domains. Models are ranked by reasoning efficiency, where higher is better; the reported scores equal Accuracy³ / #Tokens, with accuracy in percent.

OckBench

"Entities must not be multiplied beyond necessity." — The Principle of Ockham's Razor

Large Language Models (LLMs) such as GPT-4, Claude 3, and Gemini have demonstrated remarkable capabilities in complex problem-solving, largely attributed to their advanced reasoning abilities. Techniques like Chain of Thought (CoT) prompting and self-reflection have become central to this success, enabling models to perform step-by-step deductions for tasks requiring deep knowledge and logical rigor. However, as the industry increasingly emphasizes this "long decoding" mode, the computational cost associated with these reasoning processes has grown significantly.

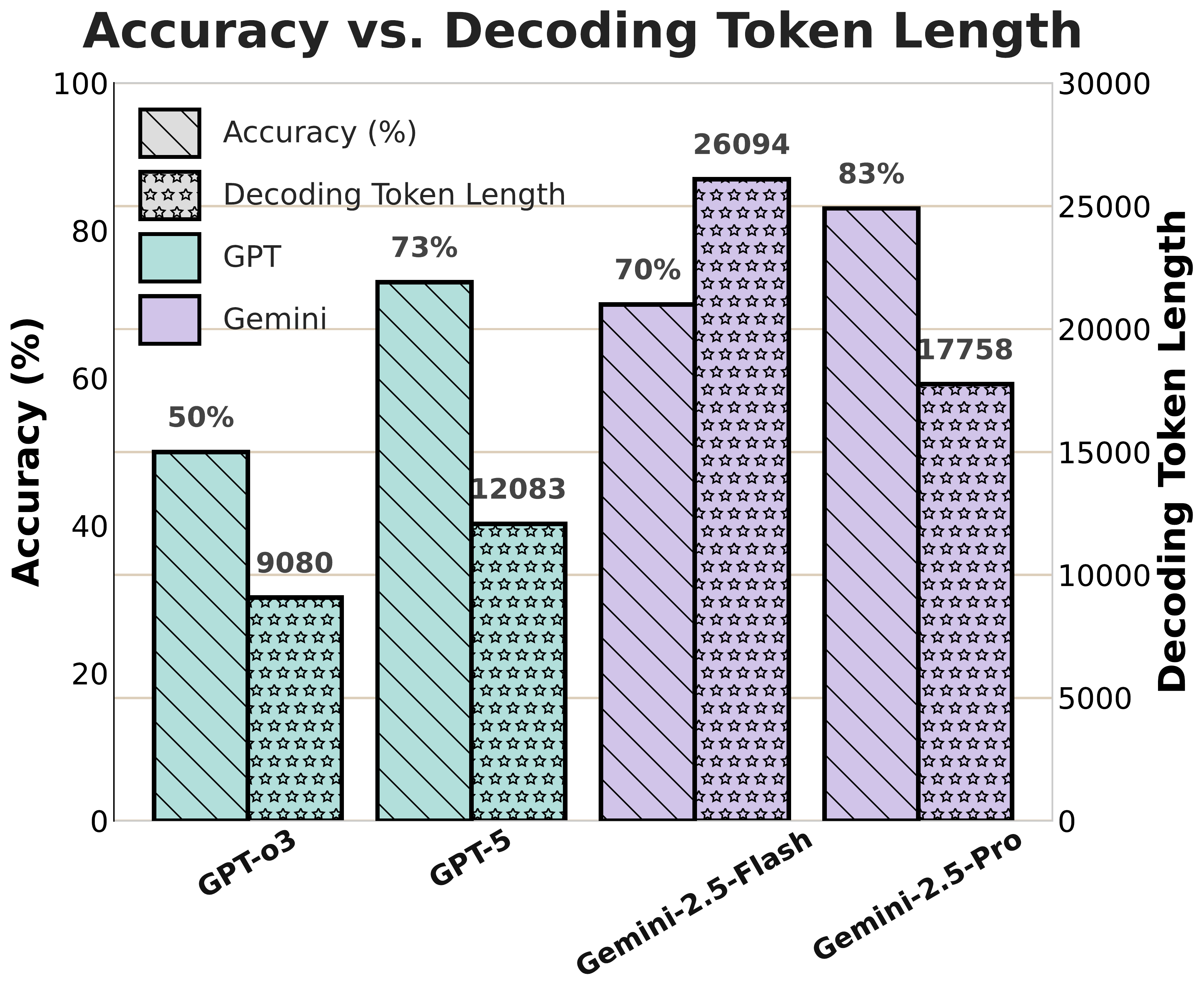

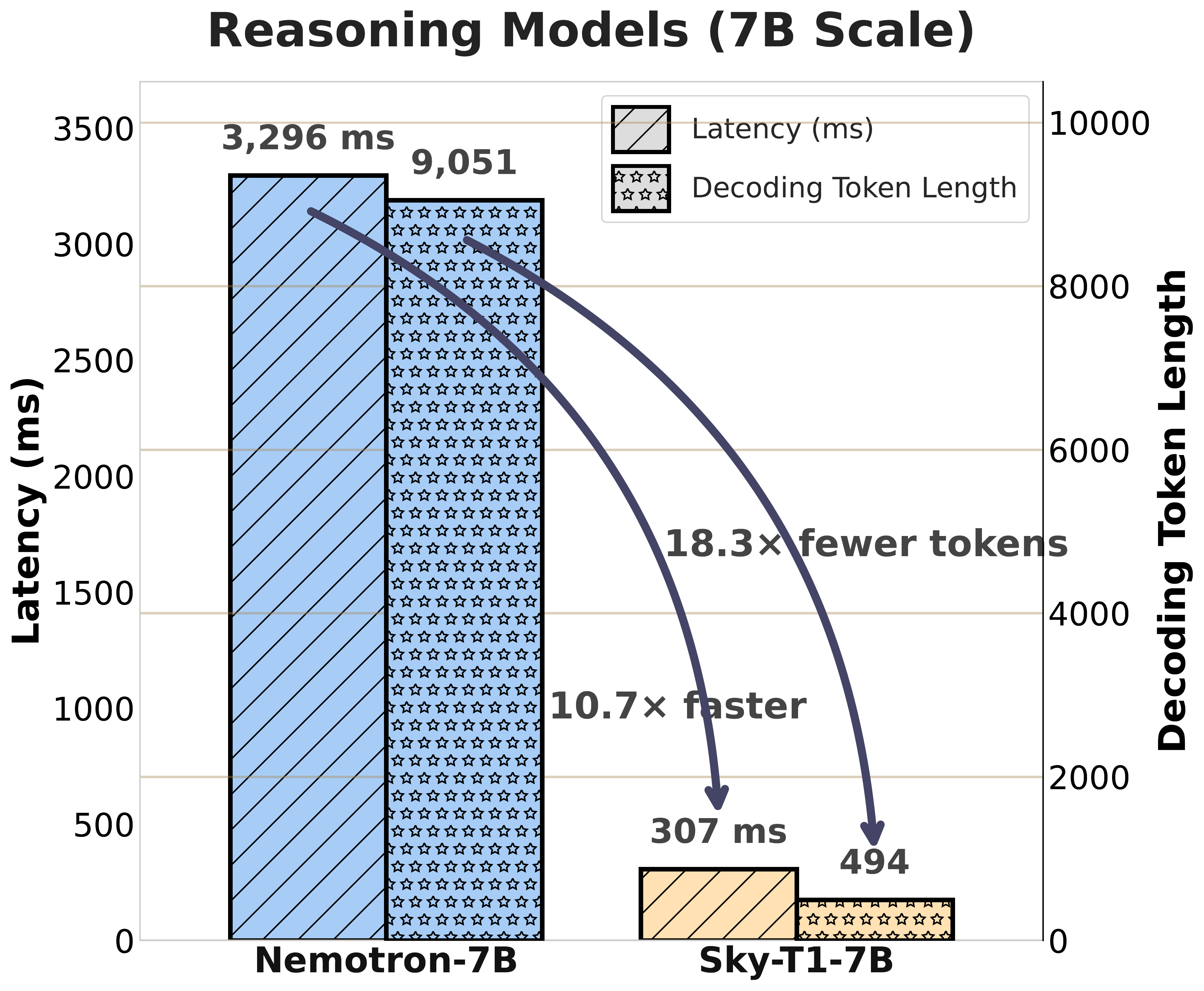

While LLM evaluation and comparison have become increasingly important, most evaluations focus on accuracy and rarely discuss generation efficiency. For example, HELM, LM-Eval, and the LMSYS Chatbot Arena rank models almost entirely on task accuracy. Yet in real systems, the difference between generating 10K and 100K tokens has substantial consequences for latency, cost, and energy.

We introduce OckBench, the first model-agnostic, hardware-agnostic benchmark that jointly measures accuracy and decoding token count for reasoning and coding tasks. Our key contributions include:

Through experiments comparing multiple open- and closed-source models, we find that many models with comparable accuracy differ widely in token consumption. For instance, among commercial models, one high-accuracy model required more than 2× the tokens of another to reach similar accuracy. Efficiency is therefore a neglected but significant axis of differentiation in LLM evaluation.

| # | Model | Developer | Category | #Tokens | Accuracy (%) | Reasoning Efficiency |
|---|---|---|---|---:|---:|---:|
| 2 | GPT-4.1 | OpenAI | Commercial | 1,349.88 | 59.00 | 152.1 |
| 3 | Qwen3-30B-A3B (thinking) | Alibaba | Open Source | 4,139.33 | 83.00 | 138.1 |
| 4 | GPT-5 | OpenAI | Commercial | 3,641.89 | 78.00 | 130.3 |
| 5 | Qwen3-235B-A22B (thinking) | Alibaba | Open Source | 4,774.40 | 81.50 | 113.4 |
| 6 | Gemini-3 Pro | Google | Commercial | 6,190.85 | 88.00 | 110.1 |
| 7 | GPT-4o | OpenAI | Commercial | 570.17 | 38.50 | 100.1 |
| 8 | Qwen3-14B (thinking) | Alibaba | Open Source | 5,084.48 | 79.00 | 97.0 |
| 9 | GPT-o3 | OpenAI | Commercial | 3,513.90 | 69.50 | 95.5 |
| 10 | Qwen3-32B (thinking) | Alibaba | Open Source | 4,797.89 | 76.50 | 93.3 |
| 11 | Gemini-2.5 Pro | Google | Commercial | 6,392.96 | 83.00 | 89.4 |
| 12 | AReaL-boba-2-32B | inclusionAI | Open Source | 5,387.96 | 78.00 | 88.1 |
| 13 | AReaL-boba-2-14B | inclusionAI | Open Source | 6,154.75 | 79.50 | 81.6 |
| 14 | Qwen3-8B (thinking) | Alibaba | Open Source | 6,302.89 | 80.00 | 81.2 |
| 15 | AReaL-boba-2-8B | inclusionAI | Open Source | 7,026.88 | 79.00 | 70.2 |
| 16 | Qwen3-4B (thinking) | Alibaba | Open Source | 6,478.45 | 72.00 | 57.6 |
| 17 | Gemini-2.5 Flash | Google | Commercial | 7,984.58 | 74.50 | 51.8 |
| 18 | AceReason-Nemotron-7B | NVIDIA | Open Source | 4,022.79 | 57.00 | 46.0 |
| 19 | AceReason-Nemotron-14B | NVIDIA | Open Source | 4,147.26 | 56.50 | 43.5 |
| 20 | Qwen3-235B-A22B (non-thinking) | Alibaba | Open Source | 980.29 | 34.50 | 41.9 |
| 21 | Qwen3-14B (non-thinking) | Alibaba | Open Source | 2,421.85 | 43.50 | 34.0 |
| 22 | Qwen3-32B (non-thinking) | Alibaba | Open Source | 1,713.91 | 36.00 | 27.2 |
| 24 | Qwen3-8B (non-thinking) | Alibaba | Open Source | 2,081.88 | 29.00 | 11.7 |
| 25 | Qwen3-4B (non-thinking) | Alibaba | Open Source | 2,262.83 | 27.50 | 9.2 |
| 26 | Qwen3-30B-A3B (non-thinking) | Alibaba | Open Source | 1,852.56 | 25.00 | 8.4 |
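The Reasoning Efficiency column can be reproduced from the other two columns: every row above matches Accuracy³ / #Tokens with accuracy in percent, rounded to one decimal place, and larger values rank higher. The short Python sketch below recomputes a few rows as a consistency check. The cubic form is inferred from the table values rather than taken from OckBench code, and `reasoning_efficiency` is a hypothetical helper name.

```python
# Sketch: recompute the "Reasoning Efficiency" column from #Tokens and Accuracy.
# Assumption: score = accuracy_pct ** 3 / tokens, inferred from the table values,
# not copied from an official OckBench implementation.

def reasoning_efficiency(tokens: float, accuracy_pct: float) -> float:
    """Return accuracy^3 / tokens, with accuracy given in percent."""
    return accuracy_pct ** 3 / tokens

# (model, #Tokens, Accuracy %) copied from the table
rows = [
    ("GPT-4.1", 1349.88, 59.00),
    ("GPT-5", 3641.89, 78.00),
    ("Gemini-3 Pro", 6190.85, 88.00),
    ("GPT-4o", 570.17, 38.50),
    ("Qwen3-30B-A3B (non-thinking)", 1852.56, 25.00),
]

# Higher score means more accuracy per decoded token, so sort in descending order.
ranked = sorted(rows, key=lambda r: reasoning_efficiency(r[1], r[2]), reverse=True)
for name, tokens, acc in ranked:
    print(f"{name:32s} {reasoning_efficiency(tokens, acc):6.1f}")
```

Cubing accuracy penalizes low-accuracy models heavily, which is why GPT-4o, despite using the fewest tokens in the table, ranks below several models that spend far more.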

OckBench provides comprehensive evaluation across multiple dimensions

OckBench is structured to test LLMs' reasoning efficiency across two complementary domains: mathematical problem solving and coding. To better expose token-efficiency differences, we select questions that exhibit high variance in decoding token usage among baseline models.
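The source does not say which statistic or which baseline models drive the selection. As one possible reading, the Python sketch below scores each question by the population variance of the token counts that several baseline models produced for it and keeps the k highest-scoring questions. `select_high_variance`, `token_counts`, and the numbers are hypothetical.

```python
# Hypothetical sketch of variance-based question selection.
# token_counts[q] holds the decoding token counts that several baseline
# models produced for question q; names and data are illustrative only.
from statistics import pvariance

def select_high_variance(token_counts: dict[str, list[int]], k: int) -> list[str]:
    """Return the k question ids whose token counts vary most across models."""
    scored = {q: pvariance(counts) for q, counts in token_counts.items()}
    return sorted(scored, key=scored.get, reverse=True)[:k]

token_counts = {
    "q1": [210, 230, 250],   # models roughly agree: low variance
    "q2": [200, 900, 2100],  # models disagree strongly: high variance
    "q3": [500, 520, 1500],
}
print(select_high_variance(token_counts, k=2))  # ['q2', 'q3']
```

Raw variance grows with answer length, so a scale-free measure such as the coefficient of variation (standard deviation divided by mean) is a reasonable alternative if long and short questions should compete on equal terms.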

Sample problems from OckBench-Math and OckBench-Coding

These examples illustrate the types of problems where token efficiency varies significantly across models.

Question: A store sells notebooks for $3 each. If you buy more than 10, you get a 20% discount on the total price. How much would it cost to buy 15 notebooks?

Domain: Mathematics | Source: GSM8K

Token variance: Some models use 200 tokens, others use 2,000+ for the same answer.
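Working the notebook question shows how little reasoning it needs: 15 × $3 = $45, and since 15 > 10 the 20% discount applies, giving $45 × 0.8 = $36. The Python sketch below encodes those steps; the answer is our own computation, not one quoted from the benchmark.

```python
# Worked answer to the notebook question (our own computation).
PRICE = 3          # dollars per notebook
QUANTITY = 15
DISCOUNT_PCT = 20  # applies when buying more than 10 notebooks

total = PRICE * QUANTITY                        # 45
if QUANTITY > 10:
    total = total * (100 - DISCOUNT_PCT) / 100  # 45 * 0.8 = 36.0
print(f"${total:.2f}")  # $36.00
```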

Question: Find the number of ordered pairs (a,b) of integers such that a² + b² = 2024 and both a and b are positive.

Domain: Mathematics | Source: AIME 2024

Token variance: High variance across models due to different reasoning approaches.
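This question illustrates how different approaches change token usage. The sum-of-two-squares theorem settles it without enumeration: 2024 = 2³ · 11 · 23, and 11 and 23 are both ≡ 3 (mod 4) and appear to an odd power, so 2024 is not a sum of two squares and the count is 0. A model can reach this with a short factorization argument or spend many more tokens testing candidates one by one. The Python sketch below contrasts both routes; the function names are ours and the answer is our own computation.

```python
from math import isqrt

def count_positive_pairs(n: int) -> int:
    """Brute force: count ordered pairs (a, b) of positive integers with a^2 + b^2 == n."""
    count = 0
    for a in range(1, isqrt(n) + 1):
        b2 = n - a * a
        b = isqrt(b2)
        if b >= 1 and b * b == b2:
            count += 1
    return count

def is_sum_of_two_squares(n: int) -> bool:
    """Sum-of-two-squares theorem: n > 0 equals a^2 + b^2 (a, b >= 0) iff every
    prime p with p % 4 == 3 divides n to an even power."""
    p = 2
    while p * p <= n:
        exponent = 0
        while n % p == 0:
            n //= p
            exponent += 1
        if p % 4 == 3 and exponent % 2 == 1:
            return False
        p += 1
    # Any remainder > 1 is a prime that appears exactly once.
    return not (n > 1 and n % 4 == 3)

print(count_positive_pairs(2024), is_sum_of_two_squares(2024))  # 0 False
```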

Task: Write a function to find the longest common subsequence of two strings. For example, lcs("ABCDGH", "AEDFHR") should return 3 (the LCS is "ADH").

Domain: Coding | Source: MBPP variant

Token variance: Efficient models write concise code with brief explanations.
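For reference, a concise solution of the kind described fits in about a dozen lines: the standard O(mn) dynamic program for LCS length, keeping only two rows of the table. This is our own Python sketch, not a reference solution from the benchmark.

```python
def lcs(s: str, t: str) -> int:
    """Length of the longest common subsequence of s and t (dynamic programming)."""
    # prev[j] holds the LCS length of the prefix of s processed so far and t[:j].
    prev = [0] * (len(t) + 1)
    for ch in s:
        curr = [0] * (len(t) + 1)
        for j, tc in enumerate(t, start=1):
            if ch == tc:
                curr[j] = prev[j - 1] + 1
            else:
                curr[j] = max(prev[j], curr[j - 1])
        prev = curr
    return prev[-1]

print(lcs("ABCDGH", "AEDFHR"))  # 3
```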

Planned Extension: We plan to extend OckBench to additional domains such as algorithmic challenges, debugging tasks, and code transformation problems to provide more comprehensive token efficiency evaluation.

Status: Coming in next version | Focus: Broader coverage

Stay tuned for expanded benchmark coverage across more reasoning domains!

If you find OckBench useful for your research, please cite our work

```bibtex
@inproceedings{du2025ockbench,
  author    = {Du, Zheng and Kang, Hao and Zhu, Ligeng and Han, Song and Krishna, Tushar},
  title     = {OckBench: Tokens are Not to Be Multiplied without Necessity},
  booktitle = {NeurIPS 2025 Workshop on Efficient Reasoning},
  year      = {2025}
}
```