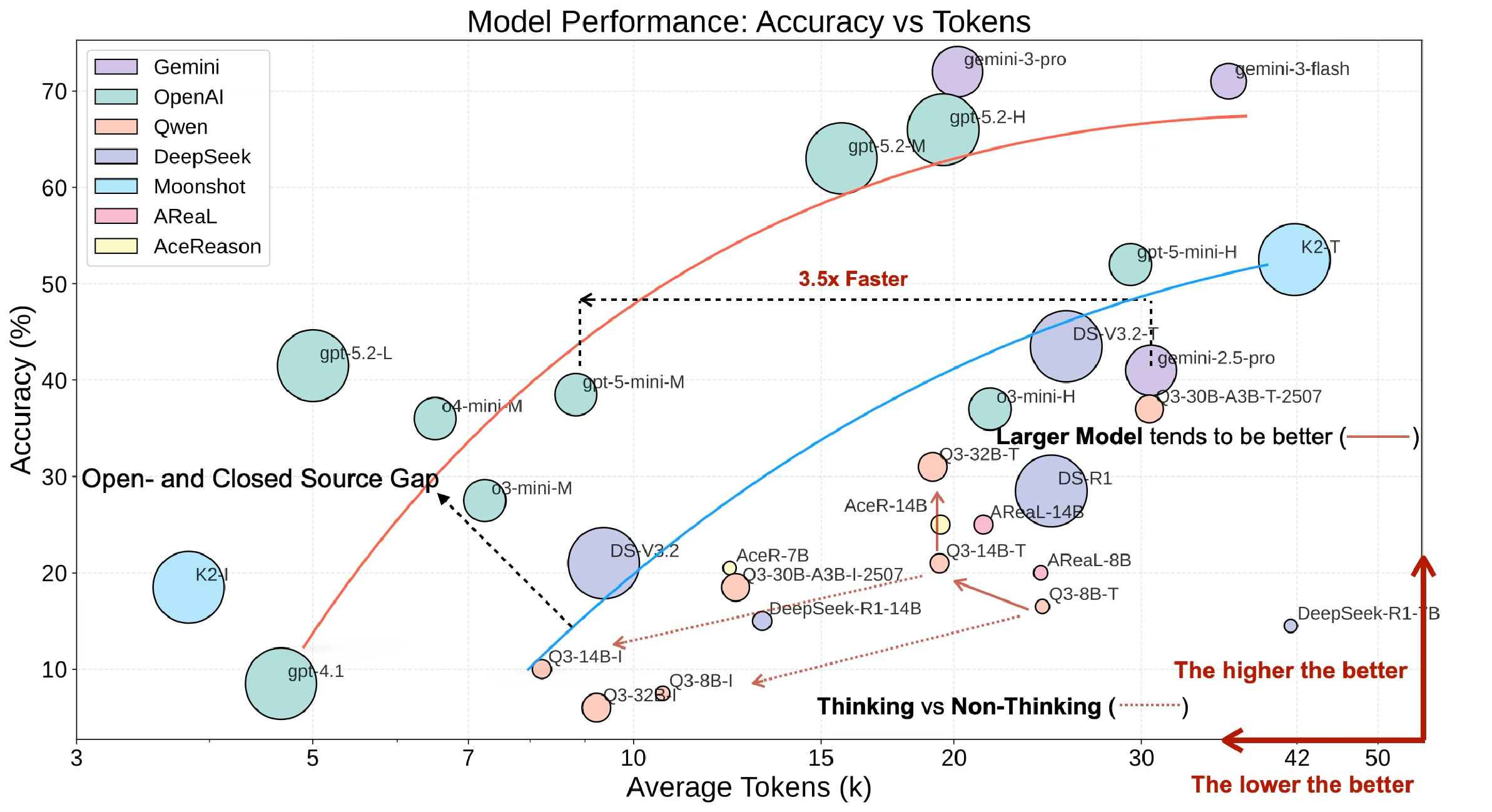

Performance of various LLMs on OckBench-Math (200 questions). Models are ranked by OckScore = Accuracy − 10 × log(Tokens / 10,000) — higher is better. All models evaluated with single-shot prompts and greedy decoding (temperature = 0).

OckBench

"Entities must not be multiplied beyond necessity." — The Principle of Ockham's Razor

Large Language Models (LLMs) like GPT-5, Gemini 3, and Claude serve as the frontier of automated intelligence, fueled by reasoning techniques like Chain of Thought (CoT). As the field embraces test-time compute scaling, models are increasingly trained to generate extensive token chains to tackle tougher problems. However, this massive inflation of decoding tokens introduces a critical bottleneck: solving just six problems in the International Olympiad in Informatics can now take over ten hours, and complex mathematical challenges frequently explode into millions of tokens.

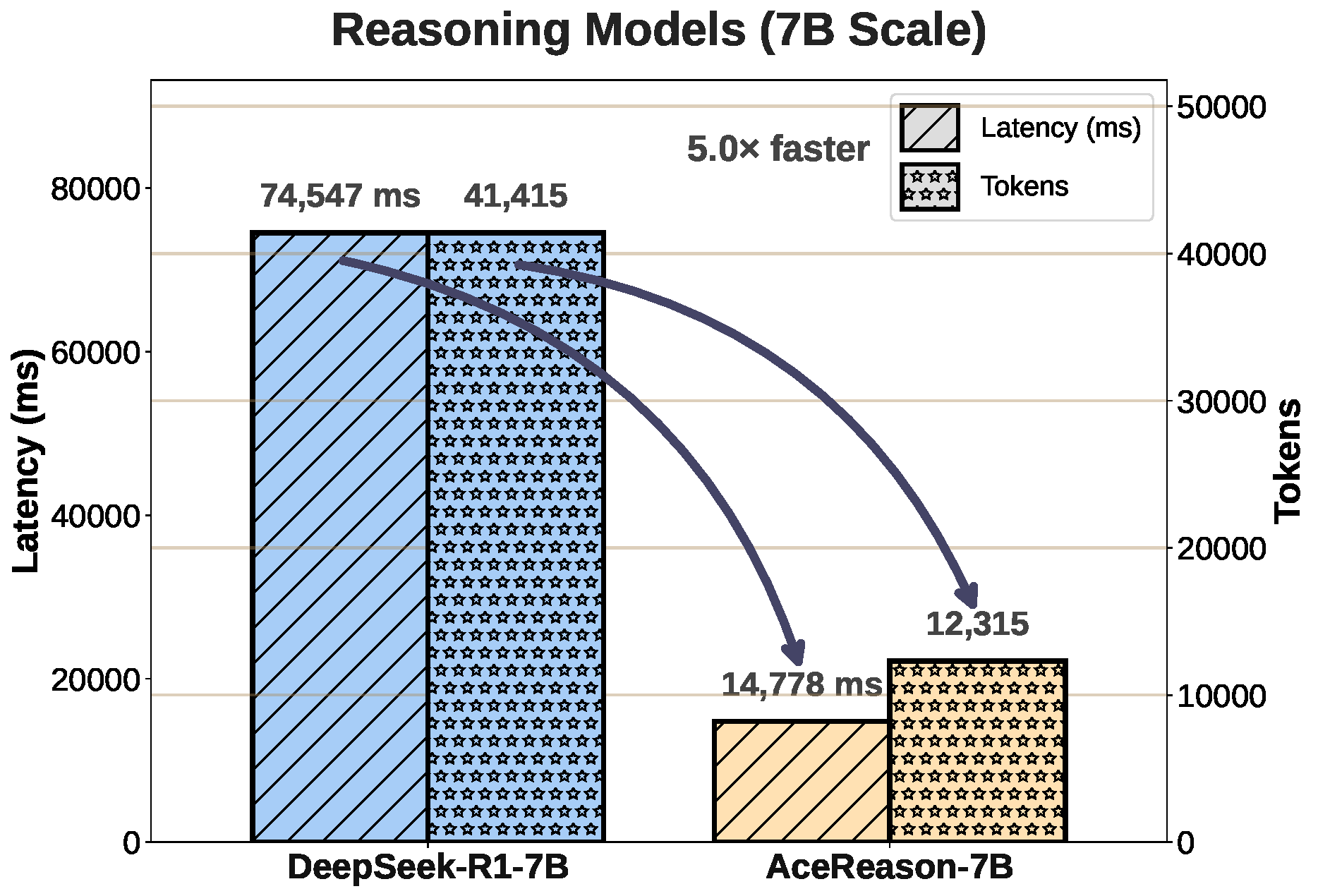

While the community celebrates gains in reasoning capability, prevailing benchmarks like HELM and Chatbot Arena focus almost exclusively on output quality, ignoring this token efficiency crisis. In reality, many models consume vastly more tokens than necessary to reach the correct answer. Models of identical size (7B) achieving similar accuracy can differ by over 3.4× in token consumption and 5.0× in end-to-end latency. As accuracy on standard tasks approaches saturation, tokens must be treated as a cost — not a free resource.

We introduce OckBench, the first model- and hardware-agnostic benchmark that jointly measures accuracy and token efficiency. Our key contributions include:

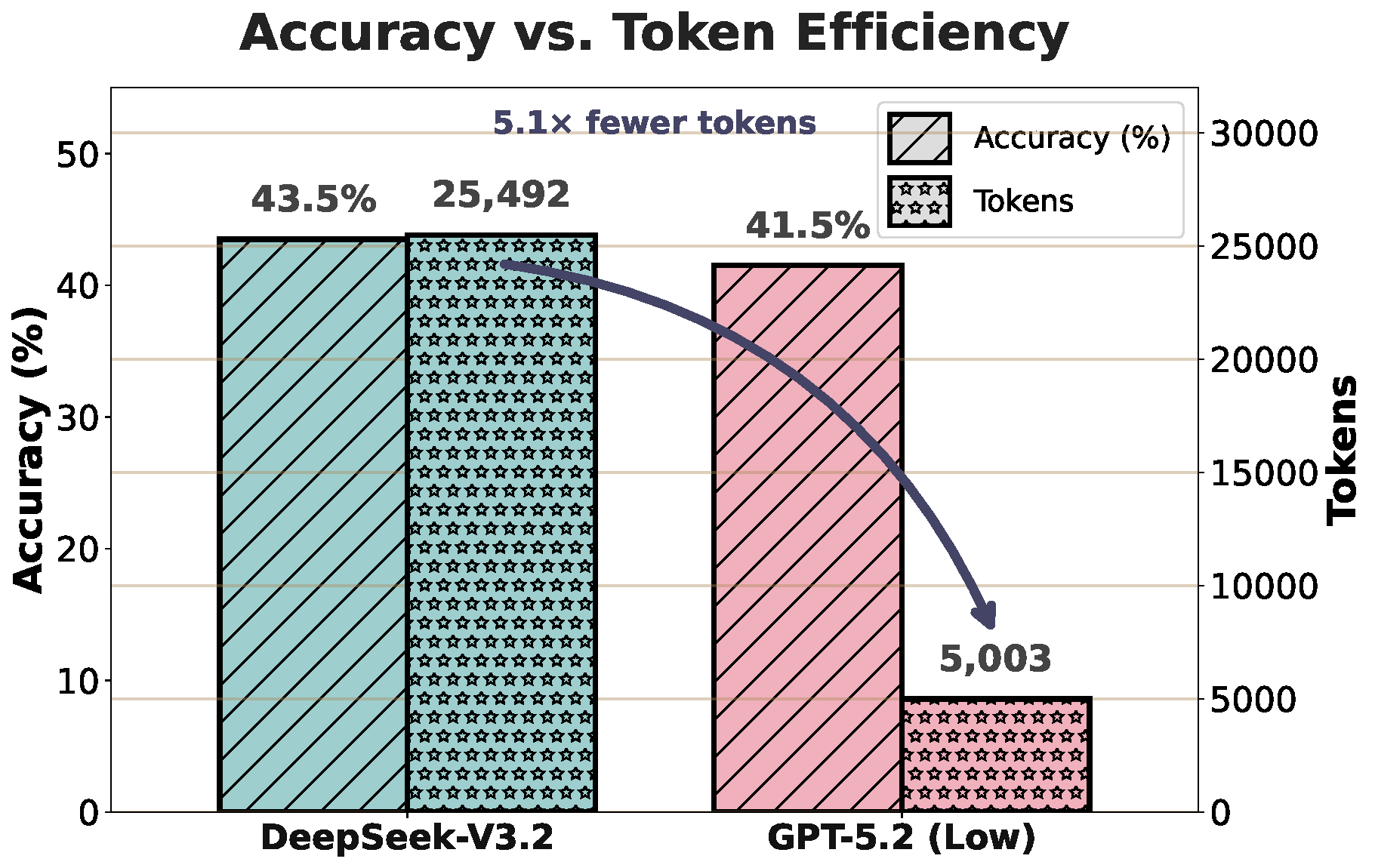

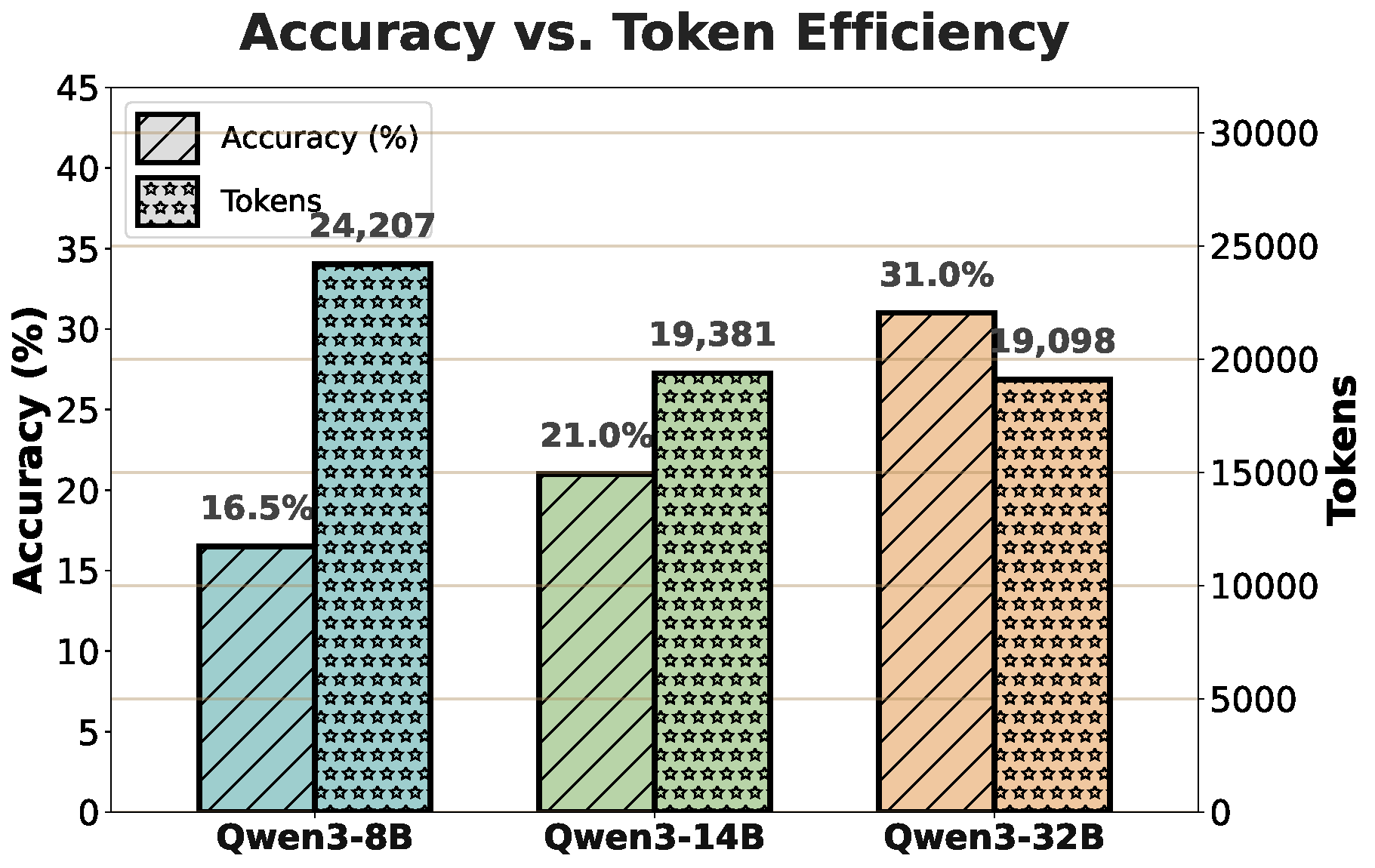

Through extensive evaluations on 50 frontier and open-source models, we find that top open-source models have nearly closed the accuracy gap but consume up to 5.1× more tokens than commercial counterparts for comparable accuracy. Meanwhile, frontier commercial models are rapidly co-optimizing both dimensions, validating Per-Token Intelligence as the next key axis of LLM evaluation.

| # | Model | Category | Avg Tokens | Accuracy (%) | OckScore ↑ |
|---|---|---|---|---|---|
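As a minimal sketch of the ranking metric, the snippet below computes OckScore = Accuracy − 10 × log(Tokens / 10,000) for a single model. The function name `ock_score`, the `log_base` parameter, and the sample numbers are hypothetical. The page writes only "log", so base 10 is an assumption here, not a confirmed detail.

```python
import math


def ock_score(accuracy_pct: float, avg_tokens: float, log_base: float = 10.0) -> float:
    """OckScore = Accuracy - 10 * log(Tokens / 10,000).

    ASSUMPTION: base-10 logarithm by default; the source does not state the base.
    """
    if avg_tokens <= 0:
        raise ValueError("avg_tokens must be positive")
    return accuracy_pct - 10.0 * math.log(avg_tokens / 10_000, log_base)


# Illustrative, made-up inputs (not leaderboard results).
print(ock_score(80.0, 10_000))   # at exactly 10,000 tokens the penalty term is zero
print(ock_score(80.0, 100_000))  # under base 10, ten times more tokens costs 10 points
```

Under this reading, a model that averages fewer than 10,000 tokens receives a bonus rather than a penalty, because the logarithm turns negative.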

OckBench provides comprehensive evaluation across multiple dimensions

OckBench aggregates tasks across three complementary reasoning domains. Rather than random sampling, we apply the Differentiation Filter: selecting problems where accuracy across models falls within 10%–90% (avoiding floor/ceiling effects) and token variance is maximized — isolating instances that reveal intrinsic efficiency differences.
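The selection rule can be sketched as follows. This is one plausible reading, not the authors' implementation: the `results` layout, the function name `differentiation_filter`, the inclusive 10%–90% bounds, and the choice to realize "token variance is maximized" as a top-`k` ranking by population variance are all assumptions.

```python
from statistics import pvariance


def differentiation_filter(results, k, lo=0.10, hi=0.90):
    """Return up to k problem ids with mid-range accuracy and the largest token variance.

    results: {problem_id: {model_name: (is_correct, num_tokens)}}  (hypothetical layout)
    """
    scored = []
    for pid, per_model in results.items():
        outcomes = list(per_model.values())
        if not outcomes:
            continue
        accuracy = sum(1 for ok, _ in outcomes if ok) / len(outcomes)
        if not (lo <= accuracy <= hi):
            continue  # floor or ceiling effect: the problem does not separate models
        variance = pvariance([tokens for _, tokens in outcomes])
        scored.append((variance, pid))
    # Highest token variance first.
    scored.sort(key=lambda item: item[0], reverse=True)
    return [pid for _, pid in scored[:k]]


# Toy data, invented for illustration only.
toy = {
    "p1": {"A": (True, 100), "B": (True, 120), "C": (True, 110)},    # everyone correct: dropped
    "p2": {"A": (True, 200), "B": (False, 4000), "C": (True, 900)},  # wide token spread
    "p3": {"A": (True, 300), "B": (False, 350), "C": (True, 320)},   # narrow token spread
}
print(differentiation_filter(toy, k=1))  # ['p2']
```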

Sample problems from OckBench-Math and OckBench-Coding

These examples illustrate the types of problems where token efficiency varies significantly across models.

Question: A store sells notebooks for $3 each. If you buy more than 10, you get a 20% discount on the total price. How much would it cost to buy 15 notebooks?

Domain: Mathematics | Source: GSM8K

$3 × 15 × 0.8 = $36 — a 3-second mental calculation. Yet some reasoning models spend 2,000+ tokens setting up formal equations, double-checking edge cases, and re-reading the problem before arriving at the obvious answer.
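For reference, the whole computation fits in a few lines. The sketch below works in integer cents to avoid floating-point rounding; the function and parameter names are hypothetical.

```python
def notebook_cost_cents(quantity, unit_price_cents=300, threshold=10, discount_pct=20):
    """Total price in cents; the discount applies only when quantity exceeds the threshold."""
    total = quantity * unit_price_cents
    if quantity > threshold:
        total = total * (100 - discount_pct) // 100
    return total


print(notebook_cost_cents(15) / 100)  # 36.0
```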

Question: Find the number of ordered pairs (a,b) of integers such that a² + b² = 2024 and both a and b are positive.

Domain: Mathematics | Source: AIME 2024

Efficient models notice 2024 = 4 × 506 and apply modular arithmetic to eliminate large search spaces in a few steps. Verbose models enumerate all 44² candidate pairs one by one — correct eventually, but at 10× the token cost.
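The contrast can be made concrete with a small sketch. `count_positive_pairs` is a direct search over a ≤ 44, and `has_two_square_form` is a shortcut based on the sum-of-two-squares theorem (a swapped-in technique, not necessarily the modular argument any particular model uses). Both function names are hypothetical.

```python
import math


def count_positive_pairs(n: int) -> int:
    """Count ordered pairs (a, b) of positive integers with a*a + b*b == n."""
    count = 0
    for a in range(1, math.isqrt(n) + 1):
        rest = n - a * a
        b = math.isqrt(rest)
        if b >= 1 and b * b == rest:
            count += 1
    return count


def has_two_square_form(n: int) -> bool:
    """Sum-of-two-squares theorem: n = x^2 + y^2 (x, y >= 0) is solvable iff every
    prime p with p % 4 == 3 divides n to an even power."""
    p = 2
    while p * p <= n:
        exponent = 0
        while n % p == 0:
            n //= p
            exponent += 1
        if p % 4 == 3 and exponent % 2 == 1:
            return False
        p += 1
    # Whatever remains is 1 or a single prime factor.
    return not (n > 1 and n % 4 == 3)


print(count_positive_pairs(2024))  # 0
print(has_two_square_form(2024))   # False, since 2024 = 2^3 * 11 * 23
```

Because 11 and 23 are both congruent to 3 modulo 4 and each appears once in the factorization, no representation exists, which is why a few lines of number theory replace the full enumeration.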

Task: Write a function to find the longest common subsequence of two strings. For example, lcs("ABCDGH", "AEDFHR") should return 3 (the LCS is "ADH").

Domain: Coding | Source: MBPP variant

A clean DP solution is ~10 lines. But many models first write a recursive solution, identify the redundancy, rewrite with memoization, then pivot to bottom-up — producing correct code buried under 800 tokens of self-tutoring.
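For comparison, a compact bottom-up version might look like the sketch below. It is one possible implementation, not a reference solution from the benchmark; it keeps only the previous DP row to save memory.

```python
def lcs(a: str, b: str) -> int:
    """Length of the longest common subsequence of a and b (bottom-up DP, rolling row)."""
    prev = [0] * (len(b) + 1)
    for ch_a in a:
        curr = [0]
        for j, ch_b in enumerate(b, start=1):
            if ch_a == ch_b:
                curr.append(prev[j - 1] + 1)  # extend the match ending before both characters
            else:
                curr.append(max(prev[j], curr[j - 1]))  # skip a character from a or from b
        prev = curr
    return prev[-1]


print(lcs("ABCDGH", "AEDFHR"))  # 3
```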

Question: A molecule undergoes a photochemical reaction in which it absorbs a photon and transitions to an excited state. If the excited state has a lifetime of 10 ns, what is the natural linewidth (in Hz) of the corresponding spectral line?

Domain: Scientific Reasoning | Source: GPQA-Diamond

Efficient models recall Δν = 1/(2πτ) and plug in τ = 10 ns for an instant answer. Overthinking models re-derive the time-energy uncertainty relation from first principles — impressively thorough, but it's a textbook formula lookup.
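Plugging τ = 10 ns into Δν = 1/(2πτ) takes one line. The sketch below does exactly that; the function name is hypothetical.

```python
import math


def natural_linewidth_hz(lifetime_s: float) -> float:
    """Natural linewidth from the excited-state lifetime: delta_nu = 1 / (2 * pi * tau)."""
    return 1.0 / (2.0 * math.pi * lifetime_s)


print(f"{natural_linewidth_hz(10e-9):.3e} Hz")  # 1.592e+07 Hz, roughly 16 MHz
```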

If you find OckBench useful for your research, please cite our work

@article{du2025ockbench,
  title={OckBench: Measuring the Efficiency of LLM Reasoning},
  author={Du, Zheng and Kang, Hao and Han, Song and Krishna, Tushar and Zhu, Ligeng},
  journal={arXiv preprint arXiv:2511.05722},
  year={2025}
}